Written by Fola Yahaya

Brought up on a diet of film classics, I used to fantasise about an alternate reality in which I was walking down the red carpet en route to receiving the Palme d’Or for my magnum opus. However, lacking any discernible creative talent and acutely aware of the terrible economics of film-making, I chose to set up a translation agency – ironic, given the devastating impact AI is now having on that particular industry.

So, I was genuinely excited by the creative possibilities that text-to-video generators, such as OpenAI’s Sora, offer to the creatively challenged. While Sora has only been available to a select band of ‘creators’ due to ethical considerations, competitors such as Runway and, more recently, a host of Chinese companies such as Kling and Hailuo have brushed aside such concerns and opened their tools to the masses.

With some unexpected time to kill over the weekend, I tried out Hailuo, a text-to-video generator from the Chinese company MiniMax. On a scale of zero to 100, my film-making skills hover around zero. However, with a few mouse clicks, Hailuo seemingly managed to bump me up to a solid 20.

Struck down with blank page syndrome, I asked ChatGPT to recreate some fight scenes in the style of Martin Scorsese’s black-and-white masterpiece, Raging Bull. As a topical twist, I asked it to use Vladimir Putin and Volodymyr Zelenskyy as the main protagonists. Hailuo then promptly 😉 created around 15 six-second scenes of cinematic weirdness.

The video below was then put together with Elevate, a web-based video editor, and Udio, an AI music generator.

Okay, my creation is clearly not going to get me to Cannes. The lighting is weird, Putin does some odd things with his hands, and the characters are all over the place. And yet, for an hour’s work, it isn’t awful. Moreover, I’m confident that if I had a good week and an ounce of talent, I could learn how to create the right prompt to ensure a decent story, maintain continuity, and use interesting camera angles, ultimately levelling up my film-making skills to a solid 25. (See the film below, for example, which was clearly made by someone who knows what they’re doing. Yes, it’s still evidently AI-generated, but hey, it’s not bad for five days of work and an outlay of apparently less than $50.)

So what were my key takeaways from my weekend of playing with Hailuo?

My experiments with Hailuo reinforced my conviction that we all need to lean in, play with AI toys and learn how to retool our processes for the near future.

Moreover, even though film-making, especially in developing countries, is a significant part of my business, I’m not rethinking my business model.

This is because even as AI transitions from toy to tool, there will always be a significant market and premium for authentic stories well told.

When we pivoted a few years ago from being a translation agency to a full-service communications agency, we made the classic mistake of signing up for a bunch of tools that claimed to help us manage media relations for our clients. One of them, Cision, offered access to a global database of journalists who, on the surface, we could spam with client press releases. Like many things in business, tools that claim to automate very human processes are rarely effective, and we cancelled about 10 months into an annual subscription for a service that had been, frankly, useless.

Sadly, we (or rather, I) hadn’t read the small print about a 90-day notice period, and Cision gleefully sent me a bill for a $15,000 annual subscription that they had not so kindly auto-renewed. Very long story short, both parties settled on a $2,500 payment. Throughout the process, AI was critical. It:

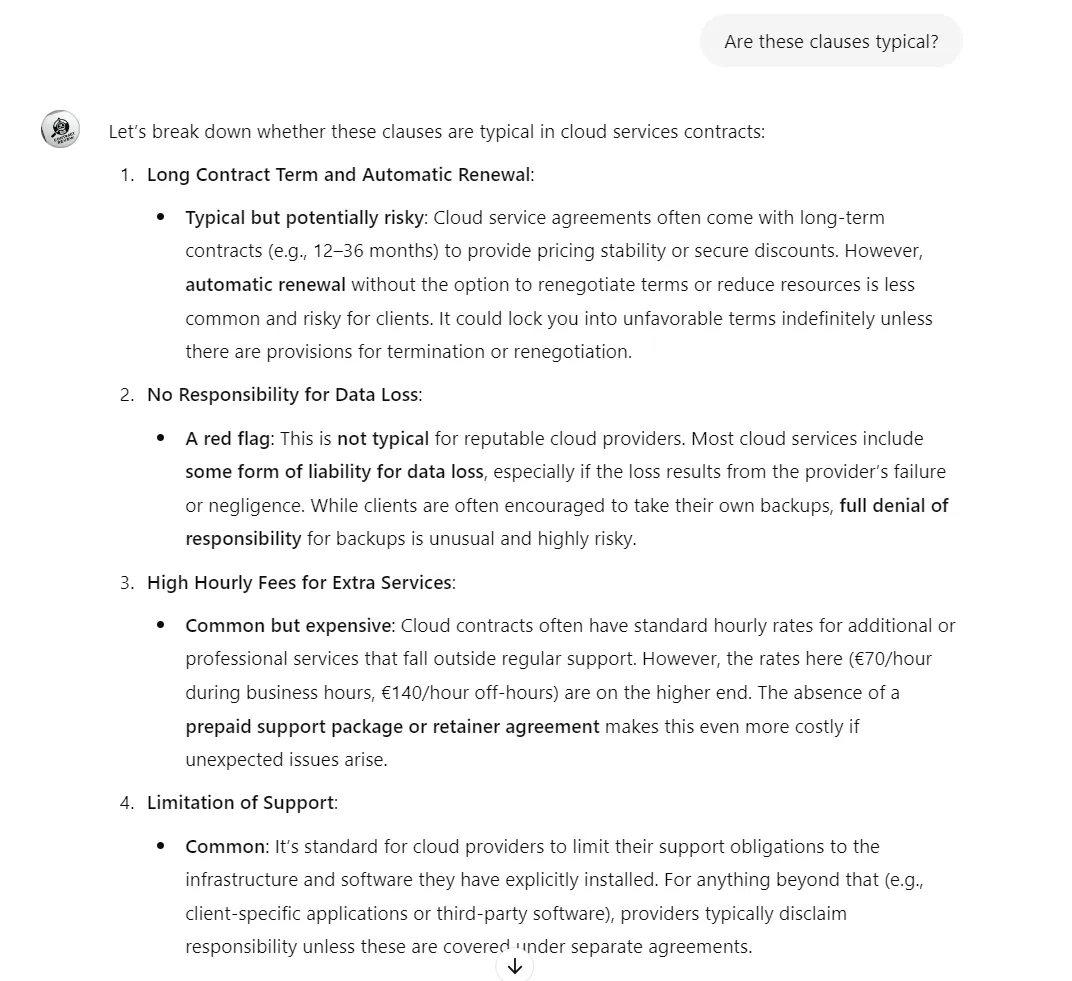

The lesson I learned from this wasn’t just to always read the fine print – I’m not a lawyer, so it would all be Greek to me – but rather to use AI. That’s exactly what I did when I had to sign a dense and complex cloud services contract this week. I scoured the OpenAI GPT Store for a contract reviewer, uploaded the contract, and sat back while the robot did its beautiful thing. See the outcome in the image below.

I duly sent these back to the provider, who modified the terms and ultimately reduced my risk. The specific GPT I used was called Contract Reviewer, but the OpenAI GPT Store has 100,000 mini ChatGPTs trained in specific domains and functioning as emergent agents.

Better still, you can create your own GPTs by uploading your documents, favourite books or curricula – with the obvious caveat that, irrespective of what OpenAI promises, your data may enter the public domain. Configure your ChatGPT settings with your particular parameters, such as jurisdiction, company or personal details, and preferences.

By creating your own mini agents, you’ll experience the shift from AI being a mere toy to a truly useful tool that can add real value.

I find Sam Altman’s five-level typology of AI progress vague and hard to relate to. Instead, I propose a much more relatable, four-level scale of progression:

Though the term ‘tyrant’ is clearly negative, it effectively conveys the inherent risks of allowing AI to operate processes autonomously.

That’s all for this week. Subscribe for the latest innovations and developments with AI.
